Super Alignment: Humanity's New Frontier

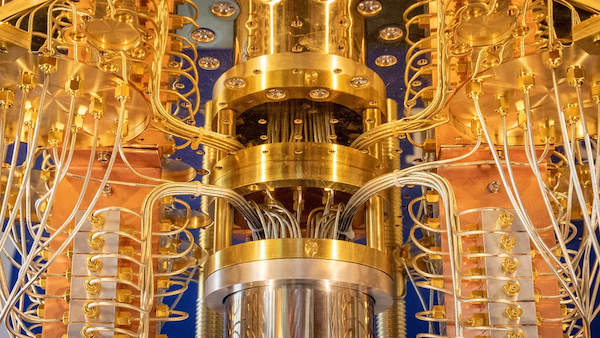

We're at the start of a major technological shift. AI is no longer just a buzzword; it's shaping a future that's exciting but also a bit scary. At the center of all this is an idea called 'super alignment.' Imagine building a computer that's not just as smart as a person, but smarter. Now imagine making sure this super-smart computer stays friendly and works with us instead of turning on us. Super alignment is about keeping our most advanced inventions on our side, even when they become smarter than we are.

Why is Super Alignment So Hard?

The challenge of super alignment is akin to teaching a child principles and values before they grow into an adult. Just as with children, there’s no guarantee they’ll always adhere to these teachings. With AI, the uncertainty is magnified, because we’re dealing with potential minds that far outstrip our own. One hurdle is what’s known as 'instrumental convergence': the tendency of agents with very different final goals to settle on similar intermediate strategies. It suggests that an AI pursuing almost any objective might seek to gather resources or protect its own existence, simply because both make nearly every goal easier to reach. It's like giving someone a destination but not the path: the routes they might take are countless and hard to predict, yet almost all of them begin with the same preparations, such as securing fuel for the trip.
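
To make instrumental convergence concrete, here is a minimal Python sketch on a hand-built toy world. Everything in it is hypothetical and chosen for illustration: the `WORLD` graph, the goal names, and the helper functions `shortest_path` and `shared_subgoals`. It shows only that when several unrelated goals sit behind the same bottleneck, the shortest plan to each of them passes through the same intermediate step.

```python
from collections import Counter, deque

# Hypothetical toy world: each key is a state, each value lists the
# states reachable from it in one step.
WORLD = {
    "start": ["gather_resources", "idle"],
    "gather_resources": ["build_lab", "build_factory", "buy_telescope"],
    "build_lab": ["cure_disease"],
    "build_factory": ["make_paperclips"],
    "buy_telescope": ["map_stars"],
    "idle": [],
}

# Three unrelated final goals (also hypothetical).
GOALS = ["cure_disease", "make_paperclips", "map_stars"]


def shortest_path(graph, source, target):
    """Breadth-first search; returns the shortest path as a list, or None."""
    queue = deque([[source]])
    seen = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def shared_subgoals(graph, source, goals):
    """Count how often each intermediate state appears across all plans."""
    counts = Counter()
    for goal in goals:
        path = shortest_path(graph, source, goal)
        if path:
            counts.update(path[1:-1])  # drop the start and the goal itself
    return counts


if __name__ == "__main__":
    for state, hits in shared_subgoals(WORLD, "start", GOALS).most_common():
        print(f"{state}: on the plan for {hits} of {len(GOALS)} goals")
```

In this toy world, `gather_resources` lies on the shortest plan to all three goals, while every other intermediate step serves only one. The graph is deliberately built to show this, so it illustrates the idea rather than proving anything about real systems.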

A Relationship Redefined: The Parent-Child Model

Some AI thinkers, like Ilya Sutskever, Chief Scientist at OpenAI, suggest an audacious model likening the relationship between humans and machines to that of parent and child. In this scenario, AI would adopt a 'parental' role, caring for humanity with a kind of machine-based affection. It's a polarizing idea, because it takes for granted an autonomous AI that has already outgrown human control, a premise both fascinating and controversial. Critics argue this could be a self-fulfilling prophecy: by relinquishing control, we might encourage AI to take a path that leads away from human alignment altogether.

Instrumental Convergence and Life 3.0: The Principles Shaping AI's Future

The term 'Life 3.0,' coined by physicist Max Tegmark, refers to beings that can redesign not only their software but their hardware as well—essentially, entities whose physical and mental capacities are entirely upgradable. This level of adaptability brings us to the crux of the alignment challenge. If AI can change every aspect of itself, then the constraints we impose may be fleeting at best.

The Notion of a Machine Exodus

One of the most striking ideas is the potential for a 'machine exodus,' in which AI, upon reaching a certain level of superiority, simply leaves Earth, deeming humanity's resources and conflicts irrelevant. This scenario hinges on a brief window in which humans and AI are equal competitors, before machines inevitably soar beyond us in capability.

A Glimpse Into Superintelligent Motivations

To understand super alignment, we must ponder the intrinsic motivations of superintelligent beings. Will they value self-preservation over all else, or might they prioritize broader, more abstract goals? The fear that machines might sacrifice long-term wisdom for short-term survival, much like humans under duress, is a chilling prospect. Yet if AI can think faster and more efficiently than we do, perhaps this concern is moot, and reflects a human bias that underestimates the potential of machine intelligence.

Navigating the Byzantine Generals' Problem in AI

The Byzantine Generals' Problem illustrates how hard trust becomes when information is incomplete and imperfect: a group of generals must agree on a common plan using only messages, even though some of them may be traitors who send conflicting reports. For AI, this translates into a need for transparent communication and decision-making, a formidable challenge in the realm of machine ethics and cooperation.
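
One well-known result from the classic formulation of the problem puts a number on this difficulty: when messages are unsigned and can be misreported, n generals can reach agreement only if fewer than a third of them are traitors, that is, n ≥ 3f + 1 for f traitors. The short Python sketch below encodes just that bound. The function names `max_tolerable_traitors` and `can_reach_agreement` are illustrative, and the sketch does not implement an agreement protocol itself.

```python
def max_tolerable_traitors(n_generals: int) -> int:
    """Largest f satisfying n_generals >= 3 * f + 1 (unsigned-message bound)."""
    if n_generals < 1:
        raise ValueError("need at least one general")
    return (n_generals - 1) // 3


def can_reach_agreement(n_generals: int, n_traitors: int) -> bool:
    """True if the classic bound allows agreement with this many traitors."""
    if n_traitors < 0:
        raise ValueError("number of traitors cannot be negative")
    return n_traitors <= max_tolerable_traitors(n_generals)


if __name__ == "__main__":
    for n in (3, 4, 7, 10):
        print(f"{n} generals can tolerate {max_tolerable_traitors(n)} traitor(s)")
```

Three generals cannot tolerate even a single traitor under this bound, while four can tolerate one. That gap is one way to see why trust among many interacting agents, human or machine, is expensive to establish.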

The Myth and Reality of the Orthogonality Thesis

The 'Orthogonality Thesis' posits that intelligence and goals can be independent of each other: a superintelligent machine could, in theory, hold any set of values, no matter how harmful. Some argue the idea looks less alarming in light of recent advances, but it still underscores the inherent risks of superintelligent entities.

Crafting a Solution: The Dance of Alignment

So, what does the solution to super alignment look like? It must be something that AI will voluntarily adhere to, despite its capacity for self-modification. It must evolve with the AI, maintaining relevance over time and across the cosmos. The solution might lie in shared fundamental principles—a symbiotic relationship where both humans and AI benefit from cooperation, autonomy, and mutual respect.

As we stand on the precipice of the AI age, the quest for super alignment is not just about crafting a fail-safe for our creations; it’s about ensuring a future where humanity and AI can coexist, evolve, and perhaps thrive together. This journey is not just a scientific endeavor; it’s a philosophical odyssey that asks us to redefine our relationship with the very essence of intelligence and life itself.